Give Agents Autonomy. Give Humans Reason to Trust.

AI agents can perceive, reason, and act autonomously. That’s not the hard part anymore. The hard part is the space between what agents can do and what organizations are willing to let them do.

The Real Problem Isn’t Control — It’s Trust

Most conversations about AI agents focus on control: how do we constrain them, monitor them, limit what they can do? But control is the wrong frame. Control creates bottlenecks. Control means every agent action needs a human in the loop, which defeats the purpose of autonomy.

The real question is trust. How do you give agents enough autonomy to be useful — while giving humans enough visibility to be confident?

Without a framework, agents improvise. And humans can’t trust what they can’t see or predict. The result is either micromanagement (agents that need approval for everything) or anxiety (agents running unsupervised with no guardrails).

SOPs Are Guardrails, Not Process

Here’s the mental shift: standard operating procedures (SOPs) aren’t process documents. They’re guardrails.

When you write an SOP with clear requirement levels — MUST, SHOULD, MAY — you’re not documenting steps. You’re encoding a trust contract between humans and agents.

For the agent:

- MUST = hard boundaries. Don’t cross these, ever.

- SHOULD = the expected approach, but adapt if context demands it.

- MAY = full autonomy. You decide how.

For the human:

- MUST = I know this always happens, no matter what.

- SHOULD = I know the default behavior, and I’ll see when it deviates.

- MAY = I’ve delegated this decision. I trust the outcome.

Same document. Two audiences. The agent reads it as an operating framework that defines where it has freedom. The human reads it as a trust contract that defines what they can rely on.
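Here is a minimal sketch of how an agent runtime might honor the three levels. It assumes an SOP can be represented as a list of steps that each carry a level. `Level`, `Step`, and `execute_step` are hypothetical names used only for illustration and are not part of any specific SOP tool. A MUST step always runs as written. A SHOULD step runs as written by default, and if the agent deviates, the deviation is flagged for the human. A MAY step is left entirely to the agent.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Level(Enum):
    MUST = "MUST"      # hard boundary: executed exactly as written
    SHOULD = "SHOULD"  # expected approach: the agent may adapt, but deviations are reported
    MAY = "MAY"        # delegated: the agent decides how


@dataclass(frozen=True)
class Step:
    level: Level
    text: str


def execute_step(step: Step, agent_choice: Optional[str] = None) -> dict:
    """Resolve one SOP step into the action taken and whether a human is told.

    agent_choice is the approach the agent would prefer (None means "follow the text").
    Hypothetical policy, for illustration only.
    """
    if step.level is Level.MUST:
        # The agent's preference is ignored: the human can rely on this always happening.
        return {"action": step.text, "flag_for_human": False}
    if step.level is Level.SHOULD:
        # Default path unless the agent proposes something else; any deviation is surfaced.
        deviated = agent_choice is not None and agent_choice != step.text
        return {"action": agent_choice if deviated else step.text, "flag_for_human": deviated}
    # MAY: full autonomy, nothing to flag.
    return {"action": agent_choice or step.text, "flag_for_human": False}


sop = [
    Step(Level.MUST, "Verify the customer's identity"),
    Step(Level.SHOULD, "Reply with the standard refund template"),
    Step(Level.MAY, "Choose the tone of the reply"),
]
choices = ["Skip verification", "Write a custom reply", "Friendly and brief"]

for step, choice in zip(sop, choices):
    print(step.level.value, execute_step(step, choice))
```

When you run it, all three readings appear together. The agent's attempt to skip identity verification is ignored. Its custom reply goes through, but with a flag the human will see. Its choice of tone passes without comment.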

Governing AI agents is less like running a factory floor and more like a board of directors overseeing a CEO: set strategic direction, define decision-making boundaries, and maintain oversight, but don’t prescribe every step.

Autonomy and Trust Scale Together

As we explored in the Economics for Agentic AI guide, organizations are shifting from upfront technology investments to pay-per-outcome models. This only works when agents can act autonomously — and when humans can trust the outcomes without watching every step.

MUST/SHOULD/MAY makes this a dial, not a switch. Start with more MUST steps and fewer MAY steps. As trust builds, convert SHOULD steps to MAY. The agent gains autonomy incrementally. The human’s trust framework stays intact throughout.
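The dial can be sketched the same way. This sketch assumes, hypothetically, that each step records how many consecutive human-reviewed runs finished without issues. Once that count reaches a threshold, a SHOULD step becomes a MAY step. The `clean_runs` field, `PROMOTION_THRESHOLD`, and `turn_dial` are illustrative names, not a prescribed mechanism.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Step:
    level: str       # "MUST", "SHOULD", or "MAY"
    text: str
    clean_runs: int  # hypothetical trust signal: consecutive reviewed runs with no issues


# Hypothetical threshold; the domain owner would choose it, not the developer.
PROMOTION_THRESHOLD = 20


def turn_dial(sop: List[Step], threshold: int = PROMOTION_THRESHOLD) -> List[Step]:
    """Return a new SOP version in which well-proven SHOULD steps become MAY.

    MUST steps are deliberately left alone: moving a hard boundary stays a human decision.
    """
    return [
        replace(step, level="MAY")
        if step.level == "SHOULD" and step.clean_runs >= threshold
        else step
        for step in sop
    ]


v1 = [
    Step("MUST", "Verify the customer's identity", clean_runs=500),
    Step("SHOULD", "Reply with the standard refund template", clean_runs=25),
    Step("SHOULD", "Escalate complaints to the operations lead", clean_runs=3),
]
v2 = turn_dial(v1)
print([step.level for step in v2])  # ['MUST', 'MAY', 'SHOULD']
```

The function returns a new list and leaves the old one unchanged. That way each SOP version survives, and the previous version stays available for comparison.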

The question isn’t “How do we control AI agents?” It’s “How do we give agents the autonomy to act, and give humans a framework they can rely on?”

Not every decision carries the same risk. Summarizing a meeting? Low stakes — let the agent run. Issuing a refund under $50? Moderate — follow the standard path, flag exceptions. Approving a six-figure contract or deleting production data? That’s a hard boundary. No agent crosses it without a human in the loop, period.

The MUST/SHOULD/MAY model makes this explicit. MUST steps are where the organization draws the line — the actions where the cost of getting it wrong is too high to tolerate. SHOULD steps are where you accept some variance because the risk is manageable. MAY steps are where the risk is low enough that autonomy is the right default.
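The risk tiers above could be encoded as a small policy function. This is a sketch built on stated assumptions. The action names are hypothetical. The $50 limit comes from the refund example. The rule that sends anything unclassified to MUST is an illustrative choice, not a standard.

```python
from typing import Optional

# Hypothetical policy values mirroring the examples above; the operations lead owns them.
REFUND_LIMIT = 50.0  # dollars: refunds under this follow the standard (SHOULD) path
LOW_STAKES = {"summarize_meeting"}
HARD_BOUNDARIES = {"approve_contract", "delete_production_data"}


def requirement_level(action: str, amount: Optional[float] = None) -> str:
    """Map an action (and optional dollar amount) to MUST, SHOULD, or MAY."""
    if action in HARD_BOUNDARIES:
        return "MUST"    # cost of error too high: a human must be in the loop
    if action in LOW_STAKES:
        return "MAY"     # low risk: autonomy is the default
    if action == "issue_refund" and amount is not None and amount < REFUND_LIMIT:
        return "SHOULD"  # manageable risk: standard path, flag exceptions
    # Assumption: anything not explicitly classified (including larger refunds)
    # falls back to the most restrictive level.
    return "MUST"


def needs_human_approval(action: str, amount: Optional[float] = None) -> bool:
    return requirement_level(action, amount) == "MUST"


for action, amount in [("summarize_meeting", None), ("issue_refund", 30.0),
                       ("issue_refund", 80.0), ("approve_contract", 250_000.0)]:
    print(action, amount, requirement_level(action, amount), needs_human_approval(action, amount))
```

Sending unknown actions to MUST is the conservative choice. A new action stays behind the hard boundary until the person who owns the domain decides otherwise.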

This isn’t a one-time decision. Risk tolerance shifts as processes mature, as agents prove reliability, as the business context changes. The guardrails need to evolve with it.

And here’s what most teams get wrong: they let developers define those boundaries. But the person who knows when a refund requires manager approval isn’t the engineer — it’s the operations lead. The person who knows which compliance checks are non-negotiable isn’t the platform team — it’s the risk manager. Guardrails need to be written by the people who own the domain, in language they already use.

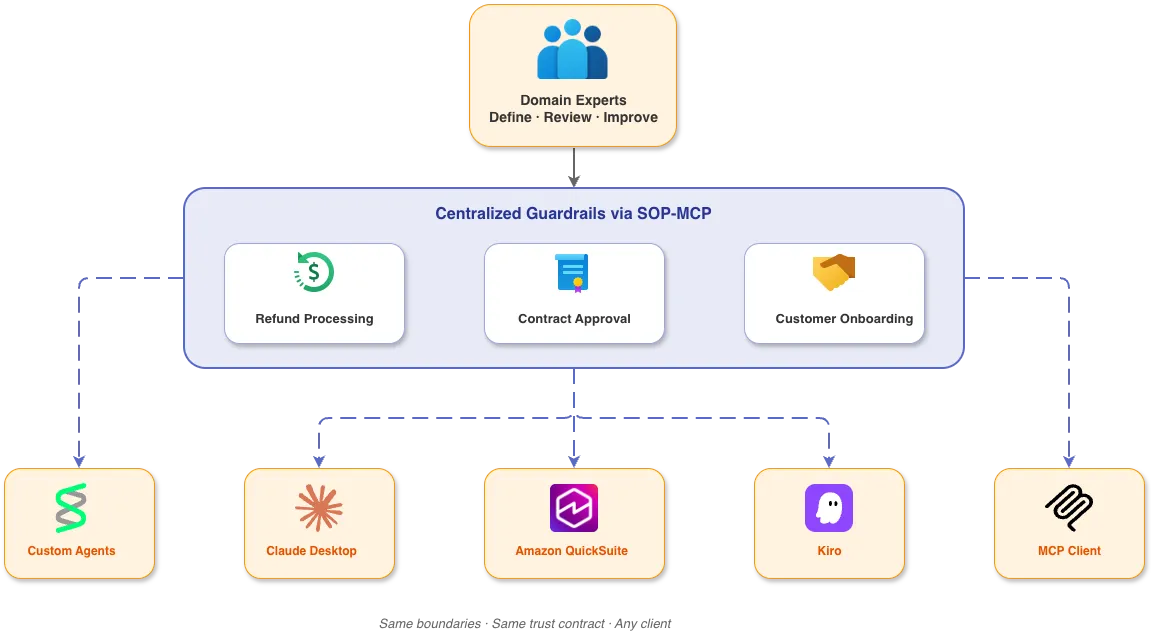

Write Once, Trust Everywhere

That’s also why guardrails should not live inside each agent. SOP-MCP serves SOPs over the Model Context Protocol (MCP). Agents can consume an SOP all at once or execute it step by step, and the same guardrails are available to any client that speaks MCP. A custom-built agent, Amazon Quick Suite, Claude Desktop, Kiro, Cursor: same boundaries, same trust contract, regardless of where the work happens.

Because SOPs are versioned and observable, they can improve with every run. Domain experts refine the guardrails based on what they observe, and every client picks up the change immediately. You’re not investing in a platform; you’re investing in your own business operations.

What happens when those processes start generating execution data, feedback loops, and organizational intelligence across the entire business? That’s where the real shift begins.